Introduction

Artificial Intelligence (AI) has advanced dramatically in the past decade, largely thanks to progress in deep learning. Deep learning lets machines discover complex patterns in huge quantities of data and make decisions based on them, using layered networks loosely inspired by the neural structure of the human brain. At the core of this technology are deep learning models and the algorithms that train them, which drive modern innovations in natural language processing, computer vision, speech recognition, autonomous systems, and more.

As industries increasingly adopt smart technology to improve productivity and accuracy, understanding how these models work, which types are available, and where they are applied is more important than ever. This article covers the fundamental concepts, model types, and training methods of deep learning, along with practical applications and recent developments. Whether you’re a teacher, researcher, developer, or business executive, it explains why deep learning matters for the future of AI.

What Are Deep Learning Models?

Deep learning models are a special kind of artificial neural network, loosely modelled on the way the brain learns to recognise patterns. Unlike traditional machine learning techniques, which usually depend on hand-engineered features, deep learning models can work directly with unstructured data such as text, images, and audio. They are composed of several layers that transform raw inputs into meaningful outputs by learning patterns and representations from the data. These models now form the core of modern AI systems.

Core Components of Deep Learning Models

Every deep learning model is built from a few basic elements. Neurons (nodes) are the processing units that pass signals between layers. Layers are grouped into an input layer, hidden layers, and an output layer; deep models have many hidden layers. Activation functions such as ReLU, Sigmoid, and Tanh add non-linearity to the network, which lets it learn complex patterns in data. A loss function measures the gap between the predicted and actual outputs and guides the learning process. Optimizers such as Stochastic Gradient Descent (SGD) and Adam minimise the loss by updating the model’s weights.
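To make these components concrete, here is a minimal pure-Python sketch of the three activation functions named above, plus two common loss functions: mean squared error (MSE) and cross-entropy. The function names are illustrative rather than taken from any framework. Libraries such as PyTorch and TensorFlow provide optimised, vectorised equivalents.

```python
import math

def relu(x: float) -> float:
    # ReLU passes positive values through and clips negatives to zero
    return max(0.0, x)

def sigmoid(x: float) -> float:
    # Sigmoid squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x: float) -> float:
    # Tanh squashes values into (-1, 1) and is centred on zero
    return math.tanh(x)

def mse(predicted, actual):
    # Mean squared error: the average squared gap between prediction and target
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

def cross_entropy(probs, target_index):
    # Cross-entropy for one example: the negative log-probability
    # that the model assigned to the true class
    return -math.log(probs[target_index])

print(mse([1.0, 2.0], [1.0, 4.0]))  # (0 + 4) / 2 = 2.0
```

A lower loss means the predictions are closer to the targets. The optimizer's job is to adjust the weights in whichever direction lowers this number.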

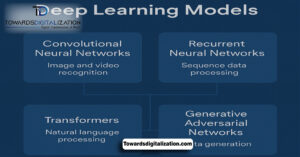

Types of Deep Learning Models

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks are used primarily to analyze visual data. They slide learned convolution filters across an image to detect spatial features such as edges and textures. CNNs are highly effective for tasks such as image classification, object detection, facial recognition, and medical image analysis. They typically combine convolutional layers, pooling layers that reduce the spatial dimensions, and fully connected layers that perform the final classification.
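The sketch below shows, in plain Python, what a single convolutional filter and a pooling layer compute. The 2x2 vertical-edge kernel is hand-picked for illustration; in a real CNN the kernel values are learned during training. As in most deep learning libraries, the "convolution" here is technically cross-correlation because the kernel is not flipped.

```python
def conv2d(image, kernel):
    # "Valid" convolution: no padding, stride 1
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum the result
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

def max_pool2d(feature_map, size=2):
    # Non-overlapping max pooling: keep the largest value in each size x size window
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [feature_map[i + di][j + dj] for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled

# A 5x5 image that is dark (0) on the left and bright (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical kernel that responds to dark-to-bright vertical edges
edge_kernel = [[-1, 1], [-1, 1]]

edges = conv2d(image, edge_kernel)   # 4x4 feature map, strongest at the edge
pooled = max_pool2d(edges)           # 2x2 summary that keeps the edge response
```

The feature map is non-zero only in the column where the brightness changes, and pooling halves each spatial dimension while keeping that strongest response.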

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks are designed to process sequential data. Unlike feed-forward networks, RNNs maintain a hidden state that carries information from earlier inputs, which makes them suitable for tasks that depend on context, such as language modelling, time-series forecasting, and speech recognition. However, traditional RNNs struggle to learn long-range dependencies because of the vanishing gradient problem.
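Here is a minimal sketch of a vanilla RNN with a single hidden unit, assuming hypothetical fixed weights `w_x`, `w_h`, and `b`. It shows how the hidden state carries memory forward. It also shows why gradients vanish: the gradient of the last state with respect to the first is a product of one factor per time step, and when each factor is below 1 the product shrinks toward zero.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    # Vanilla RNN with one hidden unit; the weights are hypothetical fixed values
    h = 0.0  # hidden state: the network's running memory of earlier inputs
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

def gradient_through_time(states, w_h=0.8):
    # Since h_t = tanh(w_x * x_t + w_h * h_{t-1} + b), each step contributes
    # a factor dh_t/dh_{t-1} = w_h * (1 - h_t^2) to the chain rule
    grad = 1.0
    for h in states:
        grad *= w_h * (1.0 - h ** 2)
    return grad

# One "signal" input followed by 29 empty time steps
states = rnn_forward([1.0] + [0.0] * 29)
grad = gradient_through_time(states)  # tiny: the early input barely affects learning
```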

Long Short-Term Memory Networks (LSTMs)

LSTMs are a more advanced form of RNN designed to address the vanishing gradient problem. They use memory cells and gates (input, forget, and output) to control the flow of information over long intervals. This makes them well suited to applications such as language translation, chatbot systems, and financial trend analysis.
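A single LSTM step can be sketched with scalar values. Each gate takes a hypothetical weight triple `(w_x, w_h, b)`, and the values below are hand-picked to show the mechanism rather than learned. With the forget gate biased open, a value written into the cell on the first step is still largely present 20 steps later.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    # p maps each gate name to hypothetical scalar weights (w_x, w_h, b)
    f = sigmoid(p["f"][0] * x + p["f"][1] * h_prev + p["f"][2])    # forget gate
    i = sigmoid(p["i"][0] * x + p["i"][1] * h_prev + p["i"][2])    # input gate
    g = math.tanh(p["g"][0] * x + p["g"][1] * h_prev + p["g"][2])  # candidate value
    o = sigmoid(p["o"][0] * x + p["o"][1] * h_prev + p["o"][2])    # output gate
    c = f * c_prev + i * g   # cell state: keep part of the old memory, add new content
    h = o * math.tanh(c)     # hidden state passed to the next step and layer
    return h, c

params = {
    "f": (0.0, 0.0, 5.0),    # forget gate biased open, so memory is retained
    "i": (10.0, 0.0, -5.0),  # input gate opens only for a strong input
    "g": (1.0, 0.0, 0.0),    # candidate value follows the input
    "o": (0.0, 0.0, 0.0),    # output gate half open
}

h, c = 0.0, 0.0
for x in [1.0] + [0.0] * 20:
    h, c = lstm_step(x, h, c, params)
# c still holds most of the value written on the first step
```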

Gated Recurrent Units (GRUs)

GRUs are a variant of RNNs designed to be simpler and faster than LSTMs while achieving similar performance. They combine the input and forget gates into a single update gate, which reduces computational complexity. GRUs are used in natural language processing, document summarization, and speech synthesis.
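The following scalar sketch shows a GRU step with hypothetical, hand-picked weights. It illustrates how one update gate `z` does the work of the LSTM's separate forget and input gates. References differ on which side of the interpolation `z` multiplies (PyTorch's `nn.GRU`, for example, writes it the other way round), but the idea is the same.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    # p maps each part to hypothetical scalar weights (w_x, w_h, b)
    z = sigmoid(p["z"][0] * x + p["z"][1] * h_prev + p["z"][2])  # update gate
    r = sigmoid(p["r"][0] * x + p["r"][1] * h_prev + p["r"][2])  # reset gate
    # Candidate state; the reset gate controls how much old state it sees
    h_tilde = math.tanh(p["h"][0] * x + p["h"][1] * (r * h_prev) + p["h"][2])
    # One gate decides both how much old state to keep (1 - z) and how much new to add (z)
    return (1.0 - z) * h_prev + z * h_tilde

keep_old = {"z": (0.0, 0.0, -10.0), "r": (0.0, 0.0, 0.0), "h": (1.0, 1.0, 0.0)}
take_new = {"z": (0.0, 0.0, 10.0), "r": (0.0, 0.0, 0.0), "h": (1.0, 1.0, 0.0)}

h_kept = gru_step(1.0, 0.7, keep_old)  # stays close to the previous state 0.7
h_new = gru_step(1.0, 0.7, take_new)   # moves close to the candidate state
```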

Autoencoders

Autoencoders are neural networks used for unsupervised representation learning. They consist of an encoder, which compresses the input into a lower-dimensional latent space, and a decoder, which reconstructs the original data from that compressed representation. Autoencoders are commonly used for image denoising, anomaly detection, and dimensionality reduction.
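The sketch below illustrates the encode, decode, and reconstruction-error idea and how it supports anomaly detection. To keep the example short, the weights are not learned. They are set by hand to a unit vector along the line y = 2x, where the hypothetical "normal" data lies, which is the direction a trained linear autoencoder would converge to on such data. Points that fit the learned structure reconstruct well, and points that don't produce a large error.

```python
import math

# Hypothetical tied encoder/decoder weights, set by hand rather than trained:
# a unit vector along the line y = 2x
W = (1 / math.sqrt(5), 2 / math.sqrt(5))

def encode(point):
    # Encoder: compress a 2-D point into a 1-D latent code
    return point[0] * W[0] + point[1] * W[1]

def decode(code):
    # Decoder: map the 1-D code back into 2-D space
    return (code * W[0], code * W[1])

def reconstruction_error(point):
    # Squared distance between the input and its reconstruction
    rx, ry = decode(encode(point))
    return (point[0] - rx) ** 2 + (point[1] - ry) ** 2

normal_error = reconstruction_error((1.0, 2.0))    # lies on y = 2x: near zero
anomaly_error = reconstruction_error((2.0, -1.0))  # off the line: large error
```

In practice, a threshold on the reconstruction error would flag the second point as an anomaly.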

Generative Adversarial Networks (GANs)

GANs consist of two networks: a generator, which creates synthetic data, and a discriminator, which judges whether data is real or generated. Through this adversarial training, the generator learns to produce highly realistic outputs. GANs are widely used for synthetic image generation, art creation, deepfake technology, and data augmentation.
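The adversarial game is usually expressed through two opposing loss functions computed from the discriminator's output, which is the probability that a sample is real. This sketch uses the standard binary cross-entropy discriminator loss and the common "non-saturating" generator loss. It is one formulation among several, and the networks themselves are omitted.

```python
import math

def discriminator_loss(d_real, d_fake):
    # The discriminator wants D(real) -> 1 and D(fake) -> 0 (binary cross-entropy)
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    # Non-saturating generator loss: the generator wants D(fake) -> 1
    return -math.log(d_fake)

# When the discriminator easily spots fakes (D(fake) = 0.1), the generator's loss is high
print(generator_loss(0.1), generator_loss(0.9))
```

Training alternates between the two: one step lowers the discriminator loss, the next lowers the generator loss, and each network improves by exploiting the other's weaknesses.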

Transformer Models

Transformer models have revolutionized natural language processing by introducing attention mechanisms, which let a model weigh different parts of the input at the same time. Unlike RNNs, which process a sequence one step at a time, transformers process entire sequences in parallel, which makes them better at capturing long-range dependencies. Examples of transformer-based models include BERT, GPT, T5, and RoBERTa. They are commonly used for machine translation, text summarization, question answering, and code generation.
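At the heart of a transformer is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. The plain-Python sketch below computes it for small lists of vectors. Real transformers add learned projections, multiple heads, and masking, all of which are omitted here.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score every key against this query, all at once rather than step by step
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
focused = attention([[5.0, 0.0]], keys, values)  # mostly attends to the first value
uniform = attention([[0.0, 0.0]], keys, values)  # equal scores: [[5.0, 5.0]]
```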

Training Deep Learning Models

Training a deep learning model involves several stages. First, data flows through the model in forward propagation, which produces predictions. The loss function then measures how accurate those predictions are. In backpropagation, the gradients of the loss with respect to each weight are calculated, and an optimisation algorithm uses them to adjust the model’s weights and reduce the error. This cycle repeats many times to improve the model’s performance.
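The same forward, loss, backward, and update cycle can be seen in its smallest form by fitting a one-variable linear model with plain gradient descent. This is not a deep network, and the data (points on y = 3x + 1), learning rate, and epoch count are hypothetical choices. Frameworks compute the gradients automatically, but here they are written out by hand.

```python
def train_linear_model(xs, ys, lr=0.05, epochs=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    loss = float("inf")
    for _ in range(epochs):
        # Forward propagation: make predictions with the current weights
        preds = [w * x + b for x in xs]
        # Loss: mean squared error between predictions and targets
        loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
        # Backpropagation: gradients of the loss with respect to w and b
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Optimisation step: move each weight against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss

w, b, loss = train_linear_model([0.0, 1.0, 2.0, 3.0, 4.0], [1.0, 4.0, 7.0, 10.0, 13.0])
# w approaches 3 and b approaches 1 as the loss falls toward zero
```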

Common techniques for improving training include batch normalisation, dropout to prevent overfitting, data augmentation to increase the diversity of the training data, and transfer learning to reuse models that were pre-trained on other tasks.
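Two of these techniques, dropout and batch normalisation, can be sketched in a few lines of plain Python. The dropout shown is the common "inverted" variant, which rescales surviving units during training so nothing needs to change at inference. The batch norm sketch normalises a batch of scalar activations using the batch's own statistics. Real implementations also track running averages for use at inference time, which is omitted here.

```python
import math
import random

def dropout(activations, p=0.5, training=True, rng=random):
    # Inverted dropout: zero each unit with probability p during training and
    # scale the survivors by 1 / (1 - p) so the expected activation is unchanged
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    # Normalise to zero mean and unit variance, then apply a learnable
    # scale (gamma) and shift (beta)
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

dropped = dropout([1.0] * 1000, p=0.5, rng=random.Random(0))  # each value is 0.0 or 2.0
normed = batch_norm([1.0, 2.0, 3.0, 4.0])                     # mean 0, variance about 1
```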

Tools and Frameworks for Deep Learning

Developers use a range of frameworks and libraries to build deep learning models efficiently. TensorFlow, created by Google, is a widely used framework that offers both flexible low-level APIs and high-level APIs. PyTorch, highly regarded by researchers, offers dynamic computation graphs that make experimentation easy. Keras is a user-friendly high-level API that runs on top of TensorFlow and simplifies model building. Other options include MXNet, known for its scalability, and Caffe, designed for computer vision tasks.

Applications of Deep Learning Models

Deep learning models have had an impact on virtually every sector, providing effective solutions to complex problems.

In healthcare, deep learning helps diagnose illnesses, predict patient outcomes, and discover new drugs. In finance, it supports fraud detection, credit scoring, and algorithmic trading. Retailers use deep learning for personalized recommendations, customer sentiment analysis, and demand forecasting.

Autonomous vehicles rely on CNNs and reinforcement learning to recognize objects, detect lanes, and make driving decisions. In entertainment, deep learning personalizes content and generates music and video game characters. Agriculture benefits from crop disease identification, soil monitoring, and yield prediction based on aerial imagery and sensor data.

Challenges in Deep Learning

Despite their strengths, deep learning models face several drawbacks. They require large amounts of labeled data, which can be costly and time-consuming to collect. Training deep networks also demands substantial computational power and is usually done on GPUs or specialized hardware such as TPUs.

Another issue is interpretability. Models often operate as black boxes, making it hard to understand how they reach their decisions. Overfitting is also a problem: a model may perform very well on its training data but fail to generalize to unseen data. Finally, ethical issues such as bias, privacy, and misuse (for example, deepfakes) must be taken into account.

Recent Innovations in Deep Learning

Recent developments continue to push the limits of deep learning. Multimodal models are designed to process images, text, and audio together, enabling more capable AI systems. Federated learning lets models be trained across many devices without sharing users’ personal data, which improves privacy.
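One widely used way to combine the devices' work is Federated Averaging (FedAvg). Each device trains a copy of the model on its own data and sends back only the resulting weights, and the server averages them, giving more weight to devices with more data. The sketch below shows only that server-side aggregation step. It assumes weights are flat lists of floats, and the local training on each device is omitted.

```python
def federated_average(client_weights, client_sizes):
    # Combine locally trained weights, weighting each device by how many
    # training examples it holds; raw data never leaves the devices
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * size for w, size in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical devices: the second holds three times as much data
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

The averaged weights are then sent back to the devices as the starting point for the next round of local training.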

TinyML brings deep learning capabilities to devices with limited resources, such as microcontrollers and sensors. Neural Architecture Search (NAS) automates the design of network architectures, optimising model performance with far less manual effort.

The Future of Deep Learning Models

The future of deep learning is likely to bring even greater breakthroughs. Researchers are working towards artificial general intelligence: systems that can think, reason, and adapt across a wide variety of tasks. They are also working to make models more efficient and environmentally sustainable by reducing their environmental impact.

Explainable AI is likely to become a top priority for ensuring trust and transparency in machine decision-making. Collaboration between AI and humans can improve productivity and creativity across many fields. In the future, deep learning models will become an integral part of our lives and power technological advances we have yet to envision.

Conclusion

Deep learning models have transformed the field of artificial intelligence. They allow machines to perform tasks that previously required human intelligence. From processing natural language to analyzing complex visual data, these models are constantly pushing the boundaries of technology.

As the technology evolves, deep learning models will become more efficient, more accessible, and more interpretable, opening up new opportunities across all industries. If you’re interested in the future of technology, understanding deep learning models is no longer a luxury; it’s essential.

Frequently Asked Questions

Question 1. What is a deep learning model?

A deep learning model is an artificial neural network made up of multiple layers that is adept at learning complicated patterns from massive amounts of data. Deep learning models are designed to loosely mimic the brain’s ability to learn from experience. They can be used for tasks such as image recognition, language translation, and voice processing.

Question 2. What is the difference between deep learning and conventional machine learning?

Unlike conventional machine learning, which relies on hand-crafted feature extraction and relatively shallow models, deep learning learns features directly from raw data using multi-layered neural networks. Deep learning is particularly well suited to unstructured data such as images, audio, and text.

Question 3. What are the most widely used deep learning models?

The most commonly used deep learning models are:

- Convolutional Neural Networks (CNNs) for image data
- Recurrent Neural Networks (RNNs) and LSTMs for sequential data
- Generative Adversarial Networks (GANs) for generating synthetic data
- Transformers for language and multimodal tasks
- Autoencoders for unsupervised learning and data compression

Question 4. What are the most important components of a deep-learning model?

The primary components include:

- Neurons (nodes)
- Layers (input, hidden, output)
- Activation functions (ReLU, Sigmoid, Tanh)
- Loss functions (MSE, Cross-Entropy)
- Optimizers (SGD, Adam)

Question 5. What are the practical applications of deep learning models?

Deep learning models are used in many different sectors, including:

- Healthcare: disease detection and medical imaging
- Finance: fraud detection and risk assessment
- Autonomous vehicles: self-driving cars
- Retail: recommendation systems
- Security: facial recognition and detection of suspicious behavior

Question 6. Do deep learning models require large volumes of data?

Deep learning models generally need large amounts of labeled data to perform well. The more complex the model and the task, the more data they typically need to learn effectively.

Question 7. Do deep learning models take a lot of time to build?

Building deep learning models can be complex and resource-intensive. It usually requires powerful hardware (such as GPUs), careful data processing, and tuning of many hyperparameters. Frameworks such as TensorFlow and PyTorch help simplify the process.