Introduction to the EU AI Act

The European Union’s Artificial Intelligence Act, also known as the EU AI Act, is a landmark piece of legislation that regulates the development and use of artificial intelligence technologies within the EU. This comprehensive regulation is designed to ensure the ethical and responsible use of AI while also fostering innovation and competitiveness in the European digital market.

The Genesis of the EU AI Act

The EU AI Act is the result of extensive discussions, consultations, and negotiations among the EU institutions, member states, industry stakeholders, and experts in the field of artificial intelligence. The need for a harmonized approach to AI regulation became increasingly apparent as AI technology advanced rapidly and the risks associated with its misuse became harder to ignore.

What is the EU AI Act?

At its core, the EU AI Act is a risk-based regulation: it sets obligations for AI systems according to the level of risk they pose, with the strictest rules applying to high-risk systems. Beyond regulating these systems, the Act also aims to establish a common European approach to AI, fostering innovation and competitiveness in the region. By setting clear requirements for AI systems, it seeks to increase trust in AI technologies among both consumers and businesses. Furthermore, the Act promotes ethical considerations in the development and deployment of AI, in line with the EU’s values and principles.

In practice, AI systems must respect safety, privacy, and fundamental rights, which organizations demonstrate through proper data governance, risk assessment, and compliance measures.

Exploring the Core Objectives

The core objectives of the EU AI Act are to promote trust and confidence in AI systems, to protect individuals’ rights and freedoms, and to support the development of AI technologies that benefit society as a whole.

By setting clear rules and standards for the use of AI, the EU aims to create a competitive and thriving digital economy while safeguarding the well-being of its citizens.

The Impact of the EU AI Act

The EU AI Act will shape how AI is developed and deployed in the European Union. This comprehensive legislation aims to ensure that AI applications adhere to ethical standards and protect fundamental rights, while still promoting innovation. By setting clear guidelines and requirements, the EU AI Act seeks to create a more transparent and accountable AI ecosystem.

Regulatory Implications for AI Development

The EU AI Act creates a regulatory framework that classifies AI systems into four categories according to their risk level: unacceptable risk (prohibited outright), high risk, limited risk, and minimal risk. High-risk AI systems are subject to strict requirements, including conformity assessments, record-keeping obligations, and transparency measures. Developers of high-risk AI technologies will need to demonstrate adherence to data governance and data protection rules, algorithmic transparency, and human oversight.
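To make the four-tier structure more concrete, the sketch below models the risk categories as a Python enum and attaches a simplified, non-exhaustive list of obligations to each. The tier names follow the Act, but the obligation strings and the helper functions `obligations_for` and `is_permitted` are our own illustrative assumptions, not legal text. Deciding which tier a real system belongs to requires legal analysis, not a lookup table.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories of the EU AI Act (names simplified)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Illustrative, non-exhaustive summary of obligations per tier.
# This is a simplification for explanation only, not legal guidance.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited: may not be placed on the EU market"],
    RiskTier.HIGH: [
        "conformity assessment",
        "risk management",
        "data governance",
        "record-keeping (logging)",
        "transparency to deployers",
        "human oversight",
    ],
    RiskTier.LIMITED: ["transparency: tell users they are interacting with AI"],
    RiskTier.MINIMAL: [],  # no mandatory obligations; voluntary codes of conduct
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the (simplified) obligations attached to a risk tier."""
    return OBLIGATIONS[tier]


def is_permitted(tier: RiskTier) -> bool:
    """Only unacceptable-risk systems are banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


if __name__ == "__main__":
    for tier in RiskTier:
        status = "permitted" if is_permitted(tier) else "banned"
        print(f"{tier.value:>12}: {status}; {len(obligations_for(tier))} obligation(s)")
```

Keeping the tier as an explicit value makes it straightforward to attach the matching checklist to each system in an internal AI inventory.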

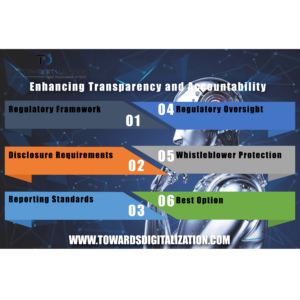

Enhancing Transparency and Accountability

The EU AI Act places a strong emphasis on transparency and accountability in AI development and deployment, with the goal of ensuring that AI technologies are used ethically and responsibly. Developers of high-risk systems, for example, must implement mechanisms for human oversight and intervention to help prevent biased or discriminatory outcomes.

Sector-Specific Impacts

The EU AI Act will have sector-specific impacts on various industries, including healthcare, finance, and automotive. How heavily each sector is affected will depend on how it uses AI and on which of its applications fall into the high-risk category.

Healthcare

The EU AI Act will affect the use of AI in healthcare for diagnosis, treatment planning, and patient care. Healthcare providers will need to ensure that AI systems used in clinical settings are reliable and accurate and that they respect patient privacy. They must also demonstrate that their AI applications are effective and safe, both to protect patients and to meet regulatory requirements.

Finance

The EU AI Act will bring significant changes to the finance sector, especially in risk assessment, fraud detection, and customer service.

Financial institutions that use AI algorithms for credit scoring, investment recommendations, or compliance monitoring must protect consumer data and mitigate the associated risks. Transparency and explainability will be crucial to keeping the AI systems deployed in financial services accountable.

Automotive

In the automotive industry, the EU AI Act will affect the development of autonomous vehicles, predictive maintenance systems, and driver assistance technologies. Manufacturers and developers will need to address safety concerns, ethical considerations, and liability issues related to AI-driven vehicles. Regulatory compliance and certification will be crucial for delivering safe and reliable AI-powered automotive solutions.

Navigating Compliance and Challenges

Navigating the European Union’s new Artificial Intelligence Act can be a daunting task for businesses and organizations. To ensure that AI systems are safe, transparent, and accountable, the EU AI Act introduces a set of compliance requirements, and the challenges they raise must be carefully addressed.

Understanding Compliance Requirements

To comply with the EU AI Act, organizations first need to understand the requirements the legislation sets out. Above all, their AI systems must be safe and must protect individuals’ fundamental rights.

Transparency means that an AI system’s decision-making is clear and traceable, which is essential if users and stakeholders are to have confidence in how the system operates and in its results. Accountability means that responsibility for an AI system’s outcomes is clearly assigned, so that individuals or organizations can be held accountable for any negative impact. Together, transparency and accountability measures make it possible to regulate and monitor AI systems and to verify that they function properly and are used ethically.
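One common engineering practice for making automated decisions traceable is to write a structured record for every decision an AI system makes. The sketch below is hypothetical: the `DecisionRecord` fields (model version, input hash, output, human reviewer) and the helper names are our own assumptions about what a useful audit trail might contain, not a log format prescribed by the Act.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One entry in a hypothetical audit log for an AI system."""
    timestamp: str
    model_version: str
    input_sha256: str  # hash of the input, so raw inputs are not stored in the log
    output: str
    human_reviewer: Optional[str] = None  # who, if anyone, reviewed the decision


def make_record(model_version: str, raw_input: str, output: str,
                reviewer: Optional[str] = None) -> DecisionRecord:
    """Build a record with a UTC timestamp and a SHA-256 hash of the input."""
    digest = hashlib.sha256(raw_input.encode("utf-8")).hexdigest()
    return DecisionRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_version=model_version,
        input_sha256=digest,
        output=output,
        human_reviewer=reviewer,
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize a record as one JSON line, suitable for append-only storage."""
    return json.dumps(asdict(record), sort_keys=True)


if __name__ == "__main__":
    # "credit-model-1.3" and "analyst_a" are made-up example values.
    rec = make_record("credit-model-1.3", "applicant features", "declined",
                      reviewer="analyst_a")
    print(to_log_line(rec))
```

Recording the model version alongside each output means a questioned decision can later be traced back to the exact model that produced it and to whoever reviewed it.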

Strategies for Overcoming Implementation Challenges

Implementing the compliance requirements of the EU AI Act may pose several challenges for organizations. A practical first step is to assess existing AI systems thoroughly and identify where they fall short of the Act’s requirements. Organizations can also invest in AI governance frameworks and tools to help ensure compliance and mitigate potential risks. Moreover, collaborating with AI experts and regulatory bodies can provide valuable insights and guidance on how best to navigate the implementation process.

The Role of Continuous Monitoring and Adaptation

Continuous monitoring and adaptation play a crucial role in maintaining compliance with the EU AI Act. Organizations should establish processes for continuously monitoring the performance and outcomes of their AI systems to identify potential issues or violations. Regularly reviewing and adapting AI systems helps organizations comply with the legislation and uphold standards of safety, transparency, and accountability.
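As a minimal sketch of what continuous monitoring could look like in code, the class below tracks a model's accuracy over a sliding window of recent predictions and flags the system for review when accuracy falls below a threshold. The class name, window size, and threshold are hypothetical, and accuracy is our own choice of metric rather than one prescribed by the Act; real monitoring would usually track several metrics, such as error rates across different user groups.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent predictions and flag drops.

    The window size and alert threshold are hypothetical parameters that an
    organization would choose for its own system.
    """

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        # A deque with maxlen discards the oldest outcome once the window is full.
        self.outcomes: deque = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction: object, actual: object) -> None:
        """Store whether a prediction matched the observed outcome."""
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> float:
        """Accuracy over the current window (1.0 if nothing recorded yet)."""
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True when the window is full and accuracy is below the threshold."""
        return (len(self.outcomes) == self.outcomes.maxlen
                and self.accuracy() < self.threshold)


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(window=5, threshold=0.8)
    for pred, actual in [(1, 1), (0, 1), (1, 1), (0, 1), (1, 1)]:
        monitor.record(pred, actual)
    print(monitor.accuracy(), monitor.needs_review())
```

Waiting until the window is full avoids raising alarms on the first few predictions; in practice, a `True` from `needs_review()` would trigger a human investigation rather than an automatic change to the system.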

Conclusion

In conclusion, the EU AI Act will shape how AI technologies are developed and used across sectors, from diagnosis and treatment planning in healthcare to finance and automotive. By setting clear rules and guidelines, the Act aims to ensure the responsible and ethical development of AI systems. As businesses and individuals navigate the evolving landscape of AI, understanding the implications of this legislation is crucial. Embracing transparency and complying with the EU AI Act can pave the way for a more trustworthy and sustainable AI future for all.

If you want to read more informative articles like this, please visit TOWARDSDIGITALIZATION.COM